with 250000 MAX_EXAMPLES in buildVocab, ran into GPU ERROR → need to figure out to make memory safe model or training ,,

Why Vocabulary-based code causes GPU OOM:

First it builds a custom vocabulary and tokenizes + batches data at runtime like this:

-

Reads all raw text lines into memory (

self.data = [x["text"] for x in dataset]) -

Each

__getitem__dynamically:- Tokenizes the text

- Converts it to indices (

text2idx) - Adds

<SOS>,<EOS>, and pads withpad_collate

-

Each batch can have variable-length inputs, padded up to a

MAX_LEN(e.g., 128) -

The full batch of size

B × L × d_modelis sent to the model on GPU

Now, here’s why OOM happens:

1. Tokenizing on-the-fly eats CPU & RAM

tokenizing and building sequences in real time per batch. This adds CPU load and RAM usage, which indirectly slows down training and can overflow system memory before GPU is even used.

2. vocabulary is large & grows with dataset

If usign a bigger dataset (e.g., OpenWebText), vocabulary grows significantly, e.g., from hundreds to tens of thousands of tokens. This increases:

-

The size of the embedding matrix (

vocab_size × d_model) -

The logits (

B × L × vocab_size) output by the model -

Memory for gradients & attention computations

-

For example, a

vocab_size = 50,000andbatch_size = 64,seq_len = 128,d_model = 512can easily exceed VRAM if multiple layers are used.

3. Longer sequences or examples increase memory quadratically

The attention mechanism has complexity of O(L²):

- A sequence of 128 tokens →

128×128attention matrix per head - With 8 heads and 64 examples =

64 × 8 × 128 × 128→ over 8 million float entries, just for attention weights

If using larger sequences or more examples, it can OOM easily.

Solution: Tokenize and preprocess beforehand

Instead of Vocabulary and text2idx at runtime, switch to:

- Pre-tokenizing dataset (e.g., with

tiktokenortransformers) - Saving tokenized examples to disk

- Loading them in a fixed format (e.g.,

input_idstensors) - Feeding them directly into the model

This allows:

- Efficient batching

- Less CPU/RAM usage

- Faster training

- Less risk of OOM

Still dmodel, batch_size, block_size, affects the memory usage. Need some tries to match the memory capacity.

For models like this, with large datasets, memory is always the problem…

For now

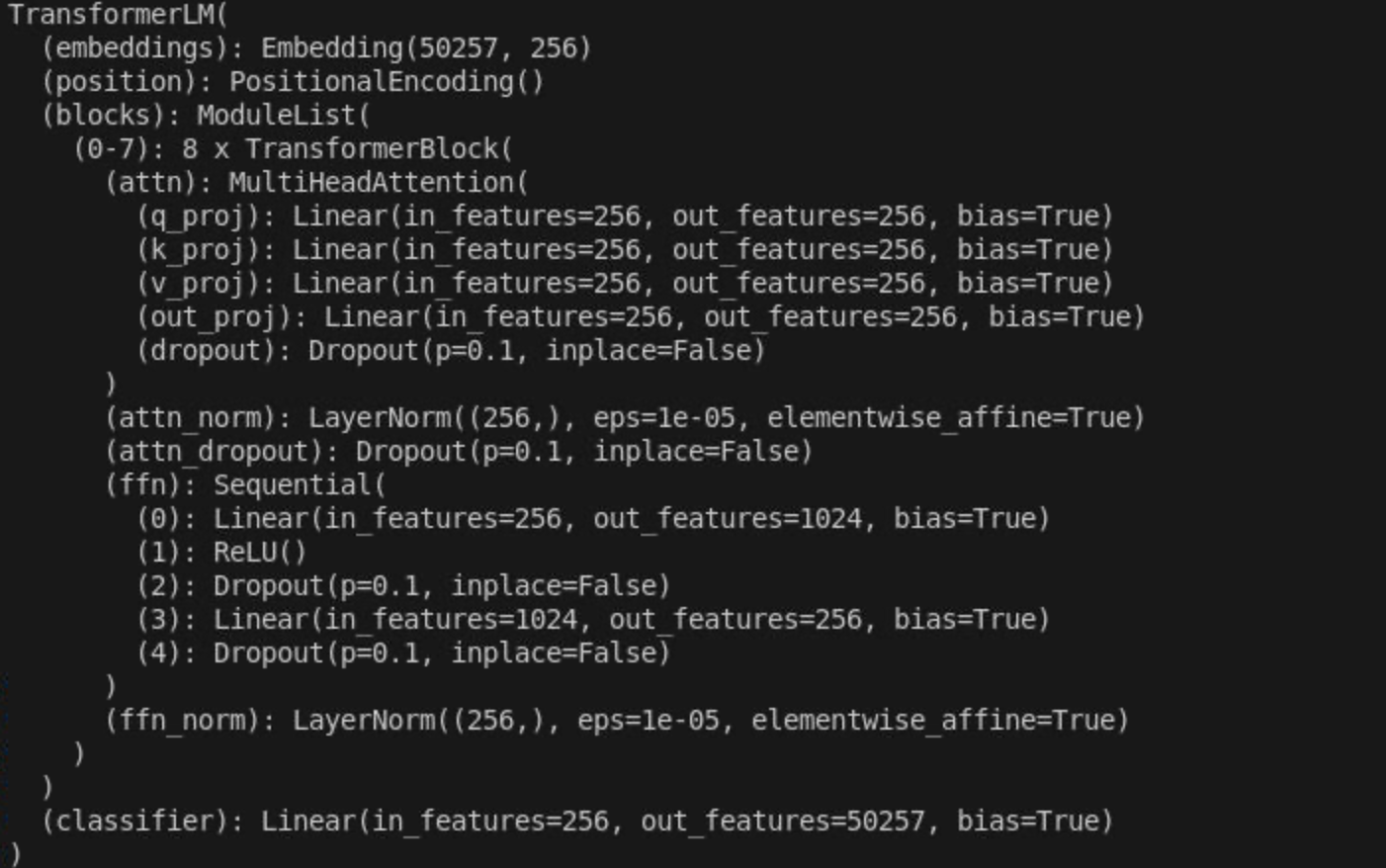

- batch_size = 64

- d_model = 256

- block_size = 256 is working

Try gradient clipping, micro batch

estimated time with pure attention model

is 340 h

Absolutely! Here’s a concise yet thoughtful project folder introduction that reflects your learning journey — from foundational concepts (like Word2Vec and RNNs) to implementing and improving GPT-2 from scratch.

📘 GPT-2 From Scratch: A Learning + Implementation Journey

This project is a hands-on, end-to-end exploration of Large Language Models (LLMs) — starting from the fundamentals of word representation to building and enhancing a full GPT-2 architecture from scratch.

🧭 Objective

The ultimate goal of this project is to:

-

Understand the full stack of LLM components, from embeddings to transformers.

-

Implement GPT-2 from the ground up, without relying on high-level libraries.

-

Incrementally evolve the architecture toward modern, state-of-the-art techniques used in large-scale LLMs.

📚 Learning Path Structure

The folder is organized to reflect a logical progression of topics:

-

Word Representations

Word2Vec,PPMI,SVD,GloVe— classical techniques for capturing semantics.

-

Sequence Models

RNN,RNN with Attention— foundational concepts in handling sequential data.

-

Transformer Core Concepts

Positional Encoding,Self-Attention,Masking— components that power transformer models.

-

Transformer Architecture

Attention is All You Need,Overall Transformer— integrating the building blocks.

-

From Scratch Implementation

- Custom implementation of GPT-2, step-by-step, with clarity and extensibility in mind.

-

Towards SOTA

- Gradual integration of modern features: residual pathways, layer norm variants, FlashAttention, parameter-efficient fine-tuning, and more.

🛠️ Why This Project?

Rather than treating LLMs as black boxes, this project is designed to demystify each layer, line-by-line. By the end of this journey, the aim is not just to use GPT-2, but to own the intuition and code behind it.

Let me know if you want a more casual, academic, or publication-style version of this intro — or if you’d like this split into a dedicated README.md.